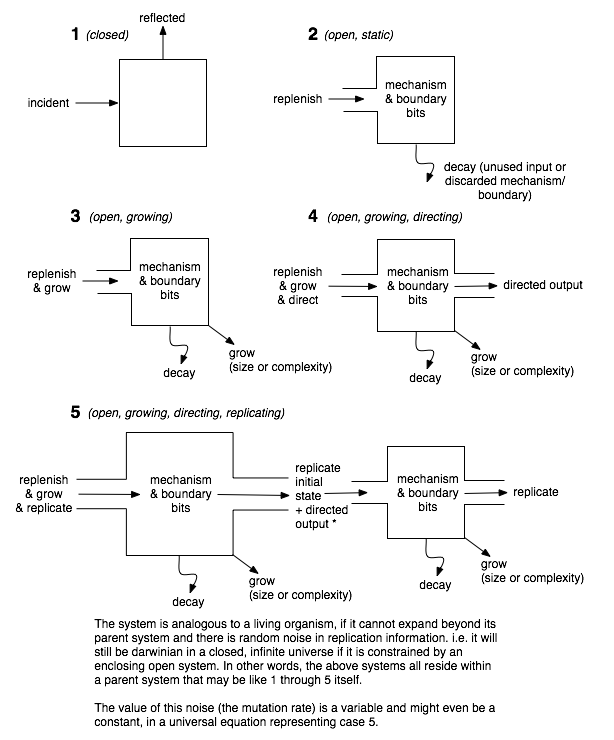

Types of Systems

This is an attempt to create a series of simple diagrams that describe archetypal types of systems and their components.

The notion of ‘systems’ here has little to do with topics such as General Systems Theory, which is broad in scope, but more to do with classifying particular types of interaction in terms of the way information interacts, changes or flows through them.

What is a system?

In everyday language a system can be a set of rules or a thing, a process or a machine; the word can describe anything from a process for doing the dishes to a transit system. For our purposes a system means something which is either partially or fully isolated from its environment or other systems, and therefore has a boundary or membrane.

A system may contain a complex mechanism or nothing at all, and it may allow things, energy or information to enter it and optionally to leave it. There may be a change in the quality and quantity of what enters and leaves the system, but such that the 2nd Law of Thermodynamics holds.

Further, the 2nd Law of Thermodynamics is expressed here as the necessary interaction between systems to eliminate difference via an entropy gradient. This makes a lower entropy system increase its overall entropy and a higher entropy system reduce its overall entropy, such that the total entropy measure (as measured by a third party) will always increase, i.e. what can flow will flow.

Entropy cannot merely stay the same after an interaction between two systems: ‘something has happened’ and the overall state has changed. On this view, two distinct states cannot have the same entropy, and therefore entropy must have increased.
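As a rough numerical illustration of this, here is a minimal sketch that uses the textbook case of two identical bodies exchanging heat as a stand-in for two interacting systems. The function name `equalize`, the constant heat capacity and the example temperatures are assumptions made for illustration only.

```python
import math

def equalize(t_hot, t_cold, heat_capacity=1.0):
    """Let two identical bodies exchange heat until their temperatures match.

    Temperatures are absolute (kelvin); heat_capacity is assumed constant.
    Returns (final_temperature, dS_hot, dS_cold, dS_total).
    """
    t_final = (t_hot + t_cold) / 2.0
    ds_hot = heat_capacity * math.log(t_final / t_hot)    # negative: this body loses entropy
    ds_cold = heat_capacity * math.log(t_final / t_cold)  # positive: this body gains entropy
    return t_final, ds_hot, ds_cold, ds_hot + ds_cold

t_final, ds_hot, ds_cold, ds_total = equalize(400.0, 200.0)
print(t_final, ds_hot, ds_cold, ds_total)
```

The difference between the two bodies is eliminated, one body's entropy falls and the other's rises, and the total is positive whenever the starting temperatures differ.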

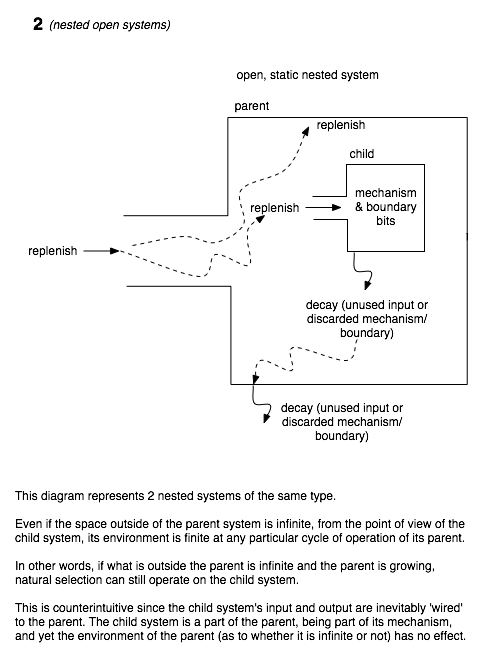

Since we define systems as things which can be nested, the environment is a system, just like any other.

[ flesh this out: A note about 3rd party measurements. Assuming that the 2nd Law of Thermodynamics holds for all observers means that if there is a message exchange between two systems, both systems will perceive an overall entropy increase, and so will a third party observing them.

As a thought experiment, if the systems consist of a person who knows something telling it to someone who doesn’t:

System (a), sender: S

System (b), recipient: R

System (c), observer: O

There is no background Environment system.

S is a two bit system consisting of a green bit and a red bit.

R is a two bit system consisting of two red bits.

Q 1: Which way does the information flow? If it flows from S -> R, this assumes that green bits are lower entropy than red. But if this is the case, then green bits are coarse-grained, i.e. they are actually macrostates consisting of more than 1 bit.

From a third party observer’s perspective, the information difference between them would appear to be reduced by one bit, i.e. the algorithmic complexity required to describe both systems reduces by one bit, because the two interacting systems are now 1 bit more similar, due to shared knowledge.

i.e.

2 bits have synced and nothing has physically been transferred: the entropy appears to have decreased and the difference is eliminated (macrostates have increased in granularity). This is merely a variant of Maxwell’s Demon.

From the information sender’s or receiver’s perspective the elimination of macrostates is different, however (assuming the sender/recipient are not conscious beings) ]
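The third-party view in the note above can be sketched in a few lines. This is only an illustration: it uses the number of differing bit positions (Hamming distance) as a crude stand-in for the difference in description length, and the names `difference` and `send_bit` and the 1/0 encoding of green/red are assumptions, not part of the thought experiment.

```python
GREEN, RED = 1, 0  # arbitrary labels for the two bit colours

def difference(a, b):
    """Count the bit positions in which two equal-length systems differ."""
    if len(a) != len(b):
        raise ValueError("systems must have the same number of bits")
    return sum(x != y for x, y in zip(a, b))

def send_bit(sender, recipient, index):
    """Return a copy of recipient with one bit overwritten by the sender's bit.

    The sender is left unchanged: it still 'knows' what it told.
    """
    updated = list(recipient)
    updated[index] = sender[index]
    return updated

S = [GREEN, RED]   # sender: one green bit, one red bit
R = [RED, RED]     # recipient: two red bits

before = difference(S, R)
R_after = send_bit(S, R, 0)
after = difference(S, R_after)
print(before, after)
```

From the observer's side the two systems start one bit apart and end identical, while S itself is unchanged.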

Both these uses describe a collection of entities connected together in some organized fashion to create an integrated whole.

Once there exist systems with multiple bits, various types of systems naturally evolve, provided there is noisy information exchange between systems of finite size (variable inheritance within a constrained environment).

These systems culminate in the type we refer to as living, in which self-replication creates an enormous diversity of systems which evolve by natural selection as a result of the laws governing noisy information exchange between finite systems.

Living systems are a particular type of system within a hierarchy of different types. Some examples of real world systems that correspond to the schematic representations of some of these, which are shown below, include:

Type 1: ‘Billiard ball’ like particles and elastic collisions, representing thermodynamic interaction, where each type 1 system is a particle. In other words there is no interior mechanism for these types of system, and interaction can be characterized by information being reflected off the system boundary.

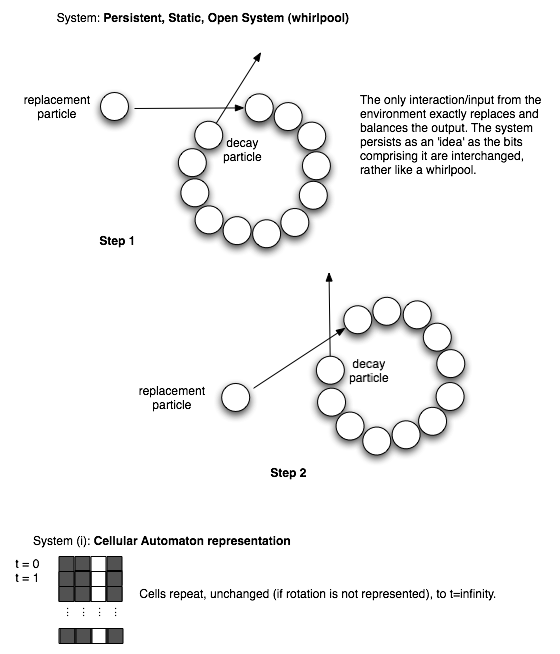

Type 2: A whirlpool

Type 3: A machine

Type 4: A universal cellular automaton

Type 5: A universal Turing Machine

Type 6: A living organism

A more detailed example of a type 2 (open, static) system:

Nested systems: (The environment need not be a closed system)

The diagram below shows a schematic model for how any type of system may be nested, such that Information measures are only relative to the immediate parent system, which need not be closed.

There is no upper limit to this taxonomy, and no implicit hierarchy. For example, a system which is a Universal Turing Machine (type 5) can be programmed by an external system to mimic any machine with a fixed, hardwired function (type 3). Above and beyond this, one can imagine a hypothetical system (type 7), defined as one which can program itself independently of external influences (this might be a good model for self-awareness).

Type 7 systems do not need to be self-replicating (type 6); hence this is not a hierarchical taxonomy but a graph of features and components that can be used to categorize and analyze any system where there is a flow of energy or, more specifically, entropy.
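One way to picture a taxonomy of features rather than ranks is to give each type a set of feature flags and check whether one set contains another. The sketch below is hypothetical: the feature names, and the choice to record only the features the text explicitly attributes to each type, are assumptions. Type 4 is left out because the text does not characterize it beyond its name.

```python
from enum import Flag, auto

class Feature(Flag):
    BOUNDARY = auto()          # every system has a boundary or membrane
    OPEN = auto()              # things can enter and leave
    FIXED_FUNCTION = auto()    # hardwired mechanism
    PROGRAMMABLE = auto()      # can be programmed by an external system
    SELF_REPLICATING = auto()
    SELF_PROGRAMMING = auto()  # programs itself without external influence

# Illustrative assignments only, based on the descriptions in the text.
SYSTEM_TYPES = {
    1: Feature.BOUNDARY,
    2: Feature.BOUNDARY | Feature.OPEN,
    3: Feature.BOUNDARY | Feature.FIXED_FUNCTION,
    5: Feature.BOUNDARY | Feature.PROGRAMMABLE,
    6: Feature.BOUNDARY | Feature.SELF_REPLICATING,
    7: Feature.BOUNDARY | Feature.SELF_PROGRAMMING,
}

def includes(a, b):
    """True if feature set a has every feature in feature set b."""
    return (a & b) == b

# Neither type 6 nor type 7 contains the other, so they cannot be ranked.
print(includes(SYSTEM_TYPES[6], SYSTEM_TYPES[7]))
print(includes(SYSTEM_TYPES[7], SYSTEM_TYPES[6]))
```

Because some pairs of feature sets are incomparable, the types form a graph of shared features rather than a single ladder.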

Notes:

The role of Feedback, in any open system:

Input can be continuous or cyclical or one-off.

Output from the system will affect the input to a greater or lesser degree, depending on the size of the ‘world system’.
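A toy model can make the dependence on world size concrete. This is a sketch under stated assumptions, not a model taken from the text: the function `simulate_feedback`, the `baseline` and `gain` parameters, and the rule that past output is diluted evenly across the world system are all invented for illustration.

```python
def simulate_feedback(world_size, steps=50, baseline=1.0, gain=0.5):
    """Return the input the system sees at each step.

    All parameters are illustrative assumptions, not quantities from the text.
    """
    pool = 0.0  # output accumulated in the surrounding world system
    inputs = []
    for _ in range(steps):
        # Input = external baseline + past output, diluted by the world's size.
        x = baseline + pool / world_size
        inputs.append(x)
        pool += gain * x  # the system's output is fed back into the world
    return inputs

small_world = simulate_feedback(world_size=10)
large_world = simulate_feedback(world_size=1_000_000)
print(small_world[-1], large_world[-1])
```

In the small world the system's own output comes to dominate its input within a few dozen steps, while in the large world the input stays very close to the baseline.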

refs: http://en.wikipedia.org/wiki/General_systems_theory

Lotka

“Lotka proposed the theory that the Darwinian concept of natural selection could be quantified as a physical law. The law that he proposed was that the selective principle of evolution was one which favored the maximum useful energy flow transformation. The general systems ecologist Howard T. Odum later applied Lotka’s proposal as a central guiding feature of his work in ecosystems ecology. Odum called Lotka’s law the maximum power principle.”

A.J. Lotka (1922b) ‘Natural selection as a physical principle’. Proc Natl Acad Sci, 8, pp 151–54.

H.T. Odum (slightly crazy)

“In the 1950s Odum introduced his electrical circuit diagrams of ecosystems to the Ecological Society of America. He claimed that energy was driven through ecological systems by an “ecoforce” analogous to the role of voltage in electrical circuits.”

This seems no different from a coarse-grained view of entropy flow.

Kangas (2004, p.101): “In the 1950s and the 1960s H.T.Odum used simple electrical networks composed of batteries, wires, resistors and capacitors as models for ecological systems. These circuits were called passive analogs to differentiate them from operational analog computer circuits, which simulated systems in a different manner.”

“In a controversial move, Odum, together with Richard Pinkerton (at the time physicist at the University of Florida), was motivated by Alfred J. Lotka’s articles on the energetics of evolution, and subsequently proposed the theory that natural systems tend to operate at an efficiency that produces the maximum power output, not the maximum efficiency. This theory in turn motivated Odum to propose maximum power as a fundamental thermodynamic law. Further to this Odum also mooted two more additional thermodynamic laws (see Energetics), but there is far from consensus in the scientific community about these proposals, and many scientists have never heard of H.T.Odum or his views.”

By the end of the 60s Odum had developed a symbolic language to describe ecosystems in terms of energy flow, called Energy Systems Language or Energese.

Complex adaptive systems

Complex adaptive systems can change and learn from experience. A CAS is a complex, self-similar collection of interacting adaptive agents. The agents as well as the system are adaptive, hence self-similar; otherwise it would be a pure multi-agent system (MAS).

“CAS ideas and models are essentially evolutionary, grounded in modern biological views on adaptation and evolution. The theory of complex adaptive systems bridges developments of systems theory with the ideas of generalized Darwinism, which suggests that Darwinian principles of evolution can explain a range of complex material phenomena, from cosmic to social objects.”

Kevin Dooley’s definition of CAS

“A CAS behaves/evolves according to three key principles: order is emergent as opposed to predetermined (c.f. Neural Networks), the system’s history is irreversible, and the system’s future is often unpredictable. The basic building blocks of the CAS are agents. Agents scan their environment and develop schema representing interpretive and action rules. These schema are subject to change and evolution.”

“some artificial life simulations have suggested that the generation of CAS (evolution possessing an active trend towards complexity) is an inescapable feature of evolution…However, the idea of a general trend towards complexity in evolution can also be explained through a passive process…the apparent trend towards more complex organisms is an illusion resulting from concentrating on the small number of large, very complex organisms that inhabit the right-hand tail of the complexity distribution and ignoring simpler and much more common organisms…This lack of an overall trend towards complexity in biology does not preclude the existence of forces driving systems towards complexity in a subset of cases.”

Adami C, Ofria C, Collier TC (2000). “Evolution of biological complexity”. Proc. Natl. Acad. Sci. U.S.A. 97 (9): 4463–8. doi:10.1073/pnas.97.9.4463. PMID 10781045. http://www.pnas.org/cgi/content/full/97/9/4463.

http://en.wikipedia.org/wiki/Portal:Systems_science