How systems self-emerge and self-configure for information exchange from 0 to 1 to n bits.

How these systems necessarily culminate in the complexity and diversity of living things as a result of rules governing information theory, where natural selection is a specific case of the laws governing noisy information exchange between finite sized systems.

Introduction

All bits are relative

If you have a machine which can store and process bits of information, then at some point these bits have some physical existence. This in turn requires that they reside in a ‘bit space’ of information that describes the attributes of the physical space the machine occupies. These bits cannot be part of the machine. This implies that information cannot be processed at a fundamental limit with infinite efficiency, and that no machine can completely describe itself. (This is merely a form of Russell’s paradox.)

From this point alone, you could argue that information is always incomplete, or relative to the base units used to describe the information space itself. However, at a less abstract level, in everyday experience, ‘bits’ of information are encoded using all sorts of bits, residing in bit spaces that can be subdivided, from a digit made of many bulbs on a billboard to many pixels on a computer screen.

Bits split into more bits

The notes below try to suggest that information only ever exists as a relative measure of difference between two systems (there is no fundamental background independent bit – without begging the question of how it can be described without requiring other bits), and that this relative measure changes each time a message is exchanged.

As systems exchange information, the total number of bits in a receiving system may possibly increase by changing the type of bit, i.e. adjusting the relative size and granularity (coarse-graining), rather than creating something out of nothing. This increases the amount of information that can be transferred at each step. In other words, systems learn.

The relative nature of information, and of the process of learning, is commonplace. A book printed in Chinese may look like a lot of random symbols to a non-Chinese speaker. How much information can be gleaned from the book depends on fluency. In other words, the perception of information entropy varies according to the knowledge of the measuring system. The number of bits that can be processed is dependent on the number of bits in the processor, and this is based on the past history of information learned. Again, this is not controversial; it is merely a reference to entropy and macrostates.
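The dependence of perceived entropy on the reader's knowledge can be illustrated with a small sketch. The text, the 8-symbol alphabet and the function name `bits_per_symbol` are all hypothetical choices made for this example; the measure used is the standard cross-entropy, i.e. the average number of bits a reader needs per symbol given the probabilities that reader assigns.

```python
import math
from collections import Counter

def bits_per_symbol(text, model):
    """Average code length (cross-entropy, in bits) of `text` under `model`,
    a dict mapping each symbol to the probability the reader assigns to it."""
    return -sum(math.log2(model[ch]) for ch in text) / len(text)

text = "abababababababab"          # hypothetical message
alphabet = list("abcdefgh")        # hypothetical 8-symbol alphabet

# Naive reader: every symbol of the alphabet looks equally likely.
naive = {ch: 1 / len(alphabet) for ch in alphabet}

# Informed reader: has already learned the symbol frequencies of this text.
counts = Counter(text)
informed = {ch: counts[ch] / len(text) for ch in counts}

print(bits_per_symbol(text, naive))     # 3.0 bits per symbol
print(bits_per_symbol(text, informed))  # 1.0 bit per symbol
```

The same message costs the naive reader three times as many bits per symbol as the informed one, which is one way of reading the claim that entropy is relative to the measuring system.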

The notion of bit granularity, bits made from other bits, is also familiar. Consider a giant billboard showing the score, ‘1’, at a sports event. If you sit nearer the billboard you may be able to see that the single ‘bit’ of information, the 1, is made up of hundreds of lamps. Hundreds of bits are required to display 1 bit. The inefficiency of the message encoding is obvious to people who sit closer and are able to see it.

A person learns to read by storing and processing knowledge, and the rate of processing seems to be dependent on the amount stored. It seems that the more you learn the more you can learn. Once a child knows how to read they can process information more quickly and learn more rapidly.

Evolution of Message Exchange Between two Systems

What would we have to show to describe how this bootstrapping for knowledge processing arises?

If we can:

1.) create an underpinning theory of how 0 bits leads to 1 bit (i.e. how systems bootstrap information exchange to begin with)

2.) describe the process of learning in terms of information theory (i.e. how 1 bit inevitably leads to 2, leads to 3), and can then:

3.) show how information exchange between systems of n bits necessarily allows for the creation of a Universal Turing Machine which can contain nested subsystems that vary over time according to varying rules within a finite universe.

Then: a macroscopic model of complex systems operating under natural selection can be derived, based upon first principles of information theory. This model could show how any information exchange will eventually self-configure to produce systems whose operation is indistinguishable from living organisms.

1. From 0 bits to 1 bit. How systems bootstrap for information exchange

Modulation

To be able to process a first bit, a ‘bit space’ needs to be established, i.e. a notion of ‘something and not something’, a 1 and a !1. A bit space is the physical location of a piece of information, and this itself requires information to locate it in a phase space. In an analog environment, this implies some kind of modulation, to create an on/off clock cycle. There are plenty of examples of natural occurrences of this, from the on and off of day and night, as the earth spins, to the cyclical warming and cooling as convection currents flow near deep sea vents. It might not be too fanciful to imagine that the modulation effect of these systems had some role in instigating information exchange, from 0 bits to 1 bit, to weather to creatures.

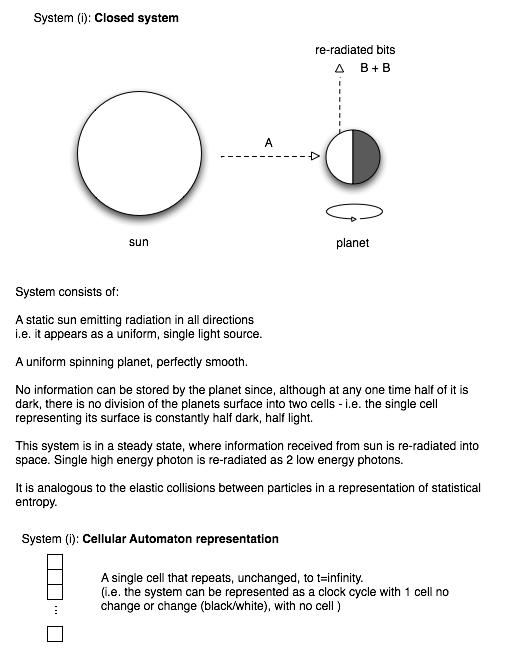

A Crude Sun/Earth Model as an Example of Message Space Configuration

In order for a system to be able to exchange information, there needs to be a ‘clock cycle’ and the ability to receive 1 bit.

To receive one bit, the system must have the notion of a lack of a bit, i.e. a message space with 2 options: [1 OR !1]. It seems that a schematic spinning earth with a sun shining on it, may provide a simple mechanism for this through the modulated signal of light/dark.

As shown below, the notion of light and dark itself does not allow for the exchange of information, unless there is a mechanism whereby the diagram of the earth becomes split into a light and a dark hemisphere, which in turn rotates into light and dark. In other words, a mechanism where one half of the earth has light burned into it to create a stored ‘image’ of one bit, while the other half does not.

The above shows how the bit space is configured, but does not show how a bit is transferred and stored.
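A minimal sketch of the light/dark modulation may help here. It assumes, purely for illustration, that sunlight at a fixed point on the spinning earth follows a cosine over a 24-hour day and that a receiver registers a 1 whenever the light exceeds a threshold; the function names, the threshold and the sampling hours are hypothetical.

```python
import math

def light_intensity(hour, day_length=24.0):
    """Idealised sunlight at a fixed point on a spinning sphere:
    +1 at local noon (hour 12), -1 at local midnight."""
    return math.cos(2 * math.pi * (hour - day_length / 2) / day_length)

def sample_bits(hours, threshold=0.0):
    """Turn the analog signal into a message space of [1 OR !1]."""
    return [1 if light_intensity(h) > threshold else 0 for h in hours]

# Sample every 6 hours over two days, starting at 03:00.
hours = [3 + 6 * k for k in range(8)]
print(sample_bits(hours))  # [0, 1, 1, 0, 0, 1, 1, 0]
```

The repeating pattern is the ‘clock cycle’. As the text notes, this only configures the bit space: nothing in the sketch stores or transfers a bit.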

2. From 1 bit to 2 bits to N bits. How Systems Self Learn

Assumption 1: Information that can flow will flow.

Information will always flow between systems, if it can, resulting in state change. If the positions and momenta of particles are represented numerically, the number of bits required to do so always increases over time. Eventually the number of bits required to describe the system is the same as the number of components (and individual states of each component), i.e. it is incompressible and has entropy equal to the log of the number of possible states. The entropy of the system tends to the maximum Kolmogorov complexity, at which point there are no subsystems within the parent system capable of interacting.
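As a worked illustration of this incompressible limit, suppose (an assumption made for the example, not something stated above) that the system has $N$ components, each with $k$ distinguishable states, and that every configuration is equally likely. Writing $W$ for the number of possible states and $S$ for the entropy in bits:

$$
W = k^{N}, \qquad S = \log_2 W = N \log_2 k
$$

For instance, $N = 4$ two-state components give $W = 16$ and $S = 4$ bits, i.e. one bit per component, so no description shorter than the list of component states exists.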

Assumption 2: State change tends to result in increase in overall entropy

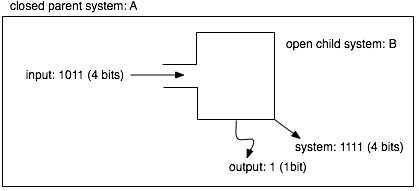

Number of bits required to describe an open system plus number of bits it outputs > number of input bits.

i.e. below: 4 + 1 > 4

3. Since the total energy of A is constant, the output bit is of a different type to the input bit (in terms of energy per bit).

4. Since bits are by definition quantized, each input bit must correspond to a whole number of output bits.

5. Over many cycles, the granularity of bits that can be processed increases.

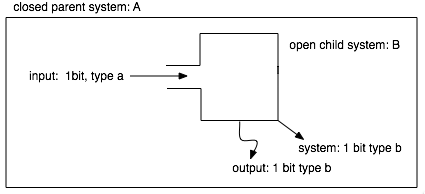

i.e.

A future cycle of the above system would look like:

1 type a bit = 2 type b bits = 4 type c bits. The bit granularity tends to increase at each step. In its most extreme case, number of cycles (messages) = log of the number of possible messages.

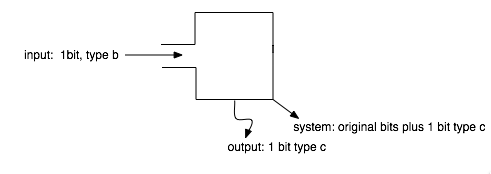

i.e. when type c bits can be read, a new type of bit, equivalent to three ‘c’ type bits, can be processed:

Taking the extreme case where each cycle increases the bit granularity:

After step 3, step 1 = 4 c bits [1 a bit ], step 2 = 2 c bits [1 b bit], step 3 = 1 c bit. The system can now process a bit of type a’ = 3c bits.
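The bookkeeping in this extreme case can be sketched as follows. The function names are hypothetical, and the sketch assumes exactly what the text assumes for the extreme case: every cycle halves the bit size, so each earlier bit type is a power-of-two multiple of the finest one.

```python
def bit_sizes(cycles):
    """Size of the bit type introduced at each cycle, measured in units of
    the finest bit type reached so far (each cycle halves the bit size)."""
    return [2 ** (cycles - 1 - step) for step in range(cycles)]

def new_composite_sizes(cycles):
    """Whole-number multiples of the finest bit, up to the coarsest bit,
    that do not coincide with any existing bit type."""
    sizes = set(bit_sizes(cycles))
    return [m for m in range(1, max(sizes) + 1) if m not in sizes]

print(bit_sizes(3))            # [4, 2, 1]  -> a = 4c, b = 2c, c = 1c
print(new_composite_sizes(3))  # [3]        -> the new type a' = 3c
```

After three cycles the only new size is 3c, matching the a’ bit described above. After four cycles the same sketch yields 3, 5, 6 and 7 units of the finest bit.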

Assuming a fundamental basebit (e.g. Planck scale), an ‘a’ type bit might be 10^(10^23) basebits.

Systems could self configure for a massive amount of potential information exchange, over time.

6. Because the granularity of bits that can be read increases as powers of 2, the number of possible messages that can be read tends to increase exponentially.

This mimics learning as we usually encounter it: the more we know, the more we can know, and shows how entropy measures may vary according to the measurer. (A child might understand an allegorical story only at face value, i.e. she would gather less meaning.)

3. From systems consisting of multiple bits to living systems

(a) Message Exchange and Increase in Complexity

Imagine that the earth is like a giant glitter ball, covered in a mosaic of tiny white tiles. Now imagine that there is a threshold of light where as the sun shines on each tile, it is flipped, such that it turns black.

As the earth spins, the effect of the sun’s rays sweeping over the tiles is such that a ripple of a line of tiles flips from white to black and then black to white, continuously, unchanging and with a clock cycle determined by the time it takes for one revolution. This is the same as the one dimensional Cellular Automaton model in (1) above, except that the light and dark are represented by flipping tiles rather than shadow, and the edges of the grid meet each other. In neither case is any information stored, or any ‘calculation’ made, in this primitive cellular automaton, since it merely reflects the incident sun.
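A one-dimensional sketch of the noiseless glitter ball makes the ‘no information stored’ point concrete. It assumes a ring of tiles of which exactly half are sunlit at any moment, with the sunlit half advancing one tile per step; `lit_tiles` and the ring size are hypothetical.

```python
def lit_tiles(n_tiles, step):
    """State of a ring of tiles at a given step: 1 (black) where the tile is
    currently sunlit, 0 (white) otherwise. The state depends only on `step`,
    never on the previous state of the tiles."""
    half = n_tiles // 2
    return [1 if (i - step) % n_tiles < half else 0 for i in range(n_tiles)]

for step in range(3):
    print(lit_tiles(8, step))
# [1, 1, 1, 1, 0, 0, 0, 0]
# [0, 1, 1, 1, 1, 0, 0, 0]
# [0, 0, 1, 1, 1, 1, 0, 0]
```

Because the pattern is a pure function of the step and repeats after one revolution, the ring carries no memory: it merely reflects the incident sun.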

Now imagine that there is a spot on the sun, or noise in the environment, or an intermittently faulty flipping mechanism for a single tile (i.e. noise in the sun, the universe or the earth – an inevitability). We now have a system which, depending on the geometry and physics of the flipping threshold, will behave like a cellular automaton.

The physics governing the flipping threshold and tile arrangement will define the rule for whether any particular tile flips, based upon the previous state of neighboring tiles, and there are a finite number of these rules. However, a different rule operating on one particular initial configuration may produce the same state change as the original rule operating on a changed initial condition. If there is noise in the system, which creates uncertainty in the measure of the initial condition, this is equivalent to a fixed initial condition and cycling through possible rules.
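The equivalence between ‘misread state, same rule’ and ‘true state, different rule’ can be checked directly for elementary (2-color, nearest-neighbor, 1-dimensional) cellular automata. The sketch below uses the standard Wolfram rule numbering on a small ring; the configurations, the choice of Rule 110 as the ‘original’ rule and the helper names are illustrative assumptions.

```python
def ca_step(cells, rule):
    """One update of an elementary CA on a ring, using Wolfram numbering:
    bit (4*left + 2*centre + right) of `rule` gives the new cell value."""
    n = len(cells)
    return [
        (rule >> (4 * cells[(i - 1) % n] + 2 * cells[i] + cells[(i + 1) % n])) & 1
        for i in range(n)
    ]

def equivalent_rules(true_cells, noisy_cells, rule):
    """All rules that, applied to the true state, give the same next state
    as `rule` applied to the noisy (misread) state."""
    target = ca_step(noisy_cells, rule)
    return [r for r in range(256) if ca_step(true_cells, r) == target]

true_cells = [0, 0, 1, 0, 0, 0]
noisy_cells = [0, 0, 1, 1, 0, 0]   # cell 3 misread as black

matches = equivalent_rules(true_cells, noisy_cells, 110)
print(len(matches), matches[0], 110 in matches)  # 16 22 False
```

Here one misread cell under Rule 110 produces exactly the step that sixteen other rules (Rule 22 among them) would produce from the true state, and Rule 110 itself is not one of them. For other configurations the list can be empty, since a single step from a noisy state is not always reproducible by any one rule.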

It has recently been proved that a simple 2-state, 3-color Turing machine can be a Universal Turing Machine, while, with the ability to cycle through rules and a particular initial condition applied, a 2-color, 1-dimensional CA can be P-complete (Rule 110). If rules were allowed to cycle through at an appropriate rate, such that a particular CA persisted long enough to pass through enough calculations per rule change to self replicate, then this would allow for information to be persistent while evolving complexity. (This proof, however, seems to apply to a CA with an infinitely expanding grid.)
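For readers unfamiliar with Rule 110, a minimal sketch of its update is given below. It runs on a small ring with wrap-around edges, like the glitter ball, which is an assumption of this example and not the infinitely expanding grid mentioned above; the function name and grid width are hypothetical.

```python
RULE = 110  # Wolfram number of the elementary CA discussed above

def rule110_step(cells):
    """One update of Rule 110 on a ring: the new value of each cell is bit
    (4*left + 2*centre + right) of the number 110."""
    n = len(cells)
    return [
        (RULE >> (4 * cells[(i - 1) % n] + 2 * cells[i] + cells[(i + 1) % n])) & 1
        for i in range(n)
    ]

row = [0, 0, 0, 0, 0, 0, 0, 1]   # single black cell as the initial condition
for _ in range(4):
    print("".join("#" if c else "." for c in row))
    row = rule110_step(row)
# .......#
# ......##
# .....###
# ....##.#
```

On a finite ring the pattern must eventually repeat, so this sketch only shows the rule's local behaviour, not universality.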

What could cause necessary, mutation-like, imperfections?

The description below shows how the effects of analog noise on the system would allow an uncertainty in the initial state of a Cellular Automaton, which could cause imperfections to kick start information exchange. Since information can only be exchanged in discrete chunks, (no bits, no information), but a collection of unmeasured things will appear fuzzy and uncertain, it seems reasonable that any environment will appear as a continuum which collapses into bits when there is interaction between a system and its environment. Interaction will result in information being resolved into different chunks or bits, with varying probability, based upon the relationship and past history of information exchange between a system and its environment. Observable but unresolvable or unresolved information will appear fuzzy and continuous. The noise in a system may be the sum total of all systems which are currently unresolvable (as messages are exchanged, the noise may turn out to be readable), and the environment may be all systems which are unresolved.

Self Evolving CA in a ‘Relative Framework’:

Self Evolving CA means: Cellular Automata which cycle through all possible rules governing them, due to noise in the interactions between each other or the environment.

Relative Framework means: an environment that is a big multi-dimensional grid of cells in a phase space, where the grid looks different (is distorted) depending on the viewpoint of the observer. There is no fixed, observable, background independent phase space, because the people doing the observing are within the same grid.

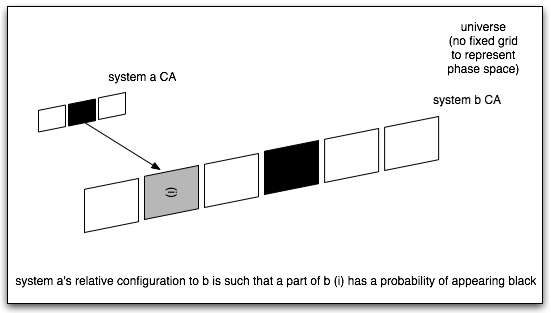

Above is a model of a self evolving CA – i.e. one which cycles through states and the rules which govern states:

The three cells shown in system (a) and the left of system (b) are the same locations in a phase space, but viewed from a different ‘frame of reference’. There may be no absolute phase space, and co-ordinates may always be relative to a measuring system.

The relative relationship between the cells in system (a)’s local phase space is such that system (a) creates a percentage probability of any location being in the same state in system (b) (shown here as gray).

This creates a chance probability of (i) being computed as black in (b) (equivalent to noise in the reading).

If system (b) is a cellular automaton of rule x, then the state of (i) appearing black has the equivalent outcome to (i) being white but a different rule being applied.

If the noise fluctuates, then eventually (b) will cycle through all possible rules, some of which might constitute a CA which is a Universal Turing Machine.

In a large ‘soup’ of such systems, one particular type of cellular automaton will dominate, over time. A dominant type of Universal Turing Machine will arise from systems which interact such that the noise is tuned so that the ambiguities in states (and therefore rules) allow cycling through the rules at a rate which is slower than the number of state changes (cycles) required for the emergence of self replicating systems. In other words systems which are both self replicating and universal calculators will dominate, if they are possible.
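The ‘tuned noise’ condition can be given a rough numerical form. The sketch below assumes, purely for illustration, that each of a system's cells is misread independently with the same probability per cycle, and that any misread counts as an effective rule change; the function names and the example numbers are hypothetical.

```python
def expected_cycles_between_rule_changes(p_misread, n_cells):
    """Mean number of cycles until at least one of `n_cells` is misread,
    assuming independent misreads with probability `p_misread` (> 0)
    per cell per cycle."""
    p_any = 1.0 - (1.0 - p_misread) ** n_cells
    return 1.0 / p_any

def rule_persists(p_misread, n_cells, cycles_to_replicate):
    """True if, on average, a rule survives longer than the number of
    cycles needed for self replication."""
    return expected_cycles_between_rule_changes(p_misread, n_cells) > cycles_to_replicate

print(rule_persists(0.001, 10, 50))  # True: low noise, rules outlast replication
print(rule_persists(0.1, 10, 50))    # False: rules change before replication completes
```

Under these assumptions, only systems whose noise level falls below a bound set by their size and replication time would be candidates for the dominant type described above.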

Once there exist systems with multiple bits, various types of systems naturally evolve, provided there is noisy information exchange between systems of finite size (variable inheritance within a constrained environment). These systems culminate in the type we refer to as living, in which self-replication creates an enormous diversity of systems which evolve by natural selection as a result of the laws governing noisy information exchange between finite systems.

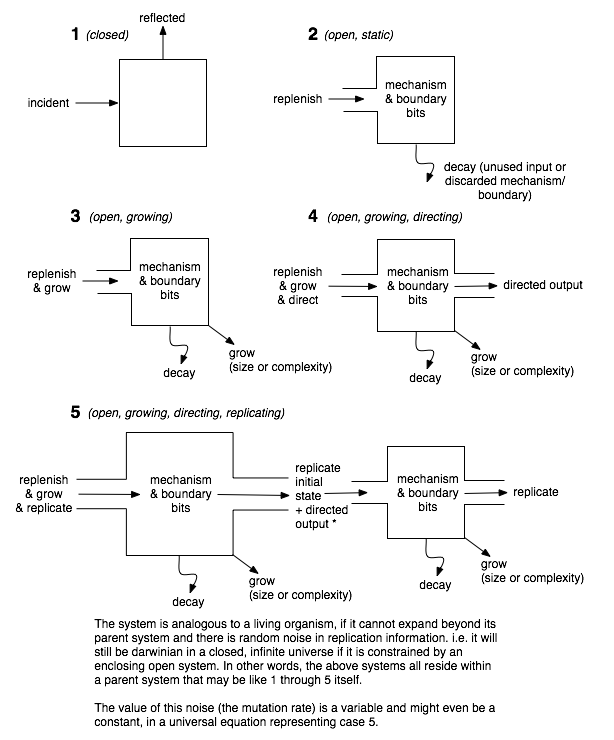

Living systems are a particular type of system within a hierarchy of different types. Some examples of real world systems that correspond to the schematic representations of some of these, which are shown below, include:

Type 1: ‘Billiard ball’ like particles and elastic collisions, representing thermodynamic interaction, where each type 1 system is a particle

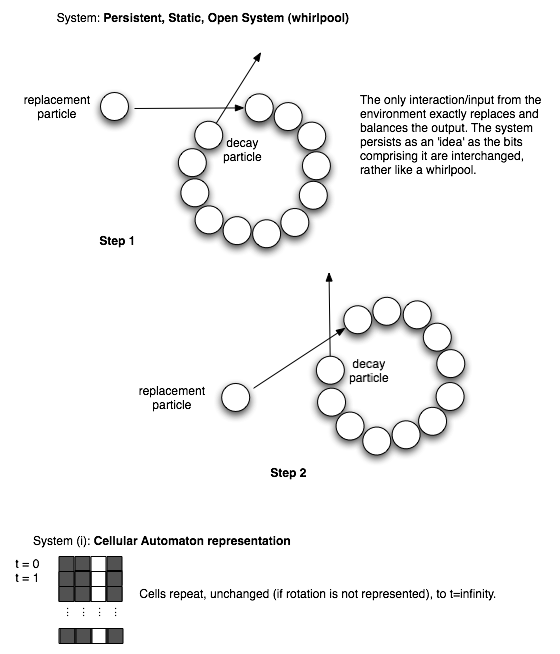

Type 2: A whirlpool

Type 3: A universal cellular automaton

Type 4: A universal Turing Machine

Type 5: A living organism

A more detailed example of a type 2 (open, static) system:

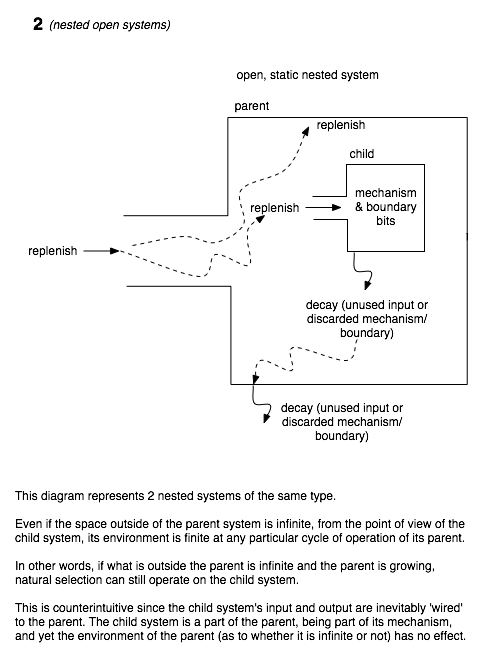

Nested systems: (The environment need not be a closed system)

The diagram below shows a schematic model for how any type of system may be nested, such that Information measures are only relative to the immediate parent system, which need not be closed.

Conclusion

The pseudo-logic linking information exchange and evolution is as follows:

Hunch: Natural selection is a single law that allows other laws to emerge. It would be very strange if such a fundamental meta law were limited to biology rather than physics or ultimately mathematics.

Axiom: Where energy can flow it will flow (i.e. there are changes in state over time, between systems across energy gradients, resulting in increased entropy)

How energy flows is defined by the laws of physics (i.e. there are rules)

Where there are rules, the next state of a system is dependent on the previous state (i.e. there is inheritance).

The definition of a ‘system’ implies a boundary (i.e. systems are somewhat finite).

Information entropy (Shannon) and heat entropy are equivalent, to avoid the Maxwell’s Demon paradox (i.e. energy flow between systems is ultimately governed by mathematics).

No system, except perhaps the universe as a whole, is fully closed, therefore any information exchange between two systems is imperfect due to the effect of the environment (i.e. all systems are subject to noise, and noise creates variability in the inheritance of rules, or mutation).

Variable (mutation) rules (inheritance) in a finite system (competition) provide a framework where the variables of natural selection necessarily operate, i.e. this is all you need for an information theory model of natural selection.
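The three ingredients named above can be put into a toy simulation. Everything specific in it is an assumption made for illustration: each system is reduced to a single hypothetical number standing for how well it persists, noise is Gaussian, and the finite environment is modelled by keeping only the `capacity` best systems each cycle.

```python
import random

def generation(population, capacity, noise, rng):
    """One cycle: every system is copied with noise (inheritance plus
    mutation), then only `capacity` systems persist (finite system)."""
    offspring = [x + rng.gauss(0.0, noise) for x in population]
    return sorted(population + offspring, reverse=True)[:capacity]

def run(generations=20, capacity=10, noise=0.1, seed=0):
    """Return the mean persistence score after each generation."""
    rng = random.Random(seed)
    population = [0.0] * capacity
    history = []
    for _ in range(generations):
        population = generation(population, capacity, noise, rng)
        history.append(sum(population) / capacity)
    return history

history = run()
print(history[0] <= history[-1])  # True: the mean score never decreases
```

Because survivors are drawn from parents and offspring together, the mean score cannot fall from one cycle to the next. With `noise=0.0` it stays at zero, which is the sense in which variation is a required ingredient here.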

Where there is information exchange, natural selection is a consequent feature. Low entropy open systems are the plumbing which maximizes the rate of dissipation of energy so that the second law of thermodynamics holds for the closed system. Systems such as cascading ‘gears’ in turbulent flow are a primitive form of self replication. We do not consider these to be living things because they disappear without evolving. A living eco-system is merely a very long lasting form of dissipative open system, but the difference between simple open systems like whirlpools and complex ones like creatures is continuous. Given enough time, any open system in an energy gradient will evolve.

Although the above description claims to show how systems might self-configure for transfer and lead to complexity, there is a logical flaw.

Item (2) describes a model for ‘learning’ which involves an increase in bits due to bits being cleaved in two after each message exchange.

Item (3a) describes how noise in an information channel (due to the environment or incomprehensible information from other systems) allows for complexity to arise via the cycling of rules within Cellular Automata due to ambiguity in message transfer.

There is a fundamental difference between these two descriptions:

(2) describes a system where the ‘bit space’ itself increases whereas (3a) assumes prior existence of one (using the tiles on a glitter ball analogy). In item (2) the number of bits doubles with each message exchange which means the number of bits increases massively, whereas (3a) allows for all possible configurations of message within one space (i.e. there is a logarithmic relationship between the two). I suspect that (2) is correct and that (3a) is an abstraction which can be mapped onto it, by assuming that a particular bit space configuration applies for (3a).

A ‘relativistic’ description of message exchange would describe the message space itself in terms of the relative difference between two systems rather than against a fixed background. The message space itself may be a function of the relationship between two systems and their history of message exchange. In other words, the message space would describe the size of each ‘bit’ and this could change over time. If multiple interacting systems were considered then each pair of interactions between any two systems would be defined by its own ‘bit’ type, creating a type of currency exchange between bits from different system pairs with no one currency being the default. In the complex interactions of everyday life, the relative differences between bits may be largely unnoticeable for familiar communication or interaction, and obvious for unfamiliar communication between 3 or more systems (such as a child, an adult and a book).

*[There is a further complexity – that doesn’t apply here. Systems and the exchange of information between them are macroscopic features relative to an observer, and their visibility and perceived interaction may be dependent on the sophistication (information state) of the observing system]