
On the left: Swiss poster demonizing Islam, to support the ban on minarets. On the right: Swiss poster proclaiming an understanding of Arab culture, soliciting funds for a private bank.

The controversy around the Swiss racist anti-minaret poster was understandable – it’s in the style and colors of pre-war Nazi propaganda for heaven’s sake. But Swiss racism is more complicated than it would first appear, particularly when it comes to money.

Anti-Muslim sentiment, in French-speaking Switzerland in particular, is possibly stoked by irrational fears of poorer French immigrant Muslims crossing the border to seek work in prosperous Geneva from its French hinterland. The reality of Geneva is that it is culturally quite Arab, even if the Swiss would deny it. The world’s most expensive watches and private banking make Geneva a playground for oil-rich Middle Easterners: a cliche, but true. And to prove it, this type of Muslim is welcomed at the airport by posters in Arabic proclaiming an ‘in-depth understanding of Arabic culture’, with little sense of irony.

My son likes Shaun the Sheep, a cute character created by Wallace and Gromit’s Nick Park. Yesterday’s trip to a petting zoo revealed the diabolical, moth-eaten, nightmare-generating monster on the right, which threatens to leave him mentally scarred forever.

[Interestingly, he just pointed to the thing on the right and said ‘Shaun the Sheep’. Which is like pointing to Jabba the Hutt and saying Leia.]

How do you bump into new people in a social network?

As an example, I became an architect (something that, contrary to popular misconception, is largely an art not a science), having originally come from a scientific background. People who are scientists often look for a description in words as to why a work of art is interesting; it’s why rather uninteresting artists like Escher are nerdy favorites – the idea is more interesting than the picture. I started architecture by producing designs that all had a story but weren’t visually original, but I remember the day at architecture school when I really ‘got it’, when I was able to design something new rather than the kind of superficial gimmick that a rational approach to design always produces. The way that I designed something original was by accident – I created enough mess around me that I made a mistake that actually turned out to be interesting.

The online world is more artificial than the real world, by definition, and therefore social interaction can be stilted and somewhat unsatisfactory, even if it can span the globe instantaneously. From flame wars to the fact that sarcasm rarely goes down well online, the online experience of communication is not quite as interesting as the real world; it’s a bit like living in Geneva rather than New York.

Perhaps there is something to be learned from Geneva rather than New York when it comes to finding out how to create serendipity in a more artificial social environment? In which case, the thing to look at might be golf. Intermingling in Geneva is largely through sporting activities in the mountains and on the lake, and as elsewhere, on the golf course.

Computers used to occupy a whole floor of a building, then a room, a desk and ultimately a lap. The laptop is the default form factor for computing, with the smartphone occupying an emerging niche for on the go. But while the laptop replaces the desktop in most cases, the smartphone doesn’t replace the laptop, but is something to have in addition to it.

The tablet will replace neither the laptop nor the smartphone. No matter what the hype, it is destined to be an ancillary form factor for computing devices.

[ The Kindle just isn’t cool. I’m a Tablet. And I’m a Kindle.]

That being said, the tablet will fill the niche that the Kindle aspires to. It’s an area that generates lots of press, because it’s the one occupied by print media itself, which is currently delivered via the medium of dead trees. It’s an anachronism that highlights an obsolete business model looking for a savior, but the Kindle isn’t it. Amazon’s device placed its bet on an irrational design choice which was based on thinking about dead trees rather than what’s on them: e-paper vs a screen, a product decision which removes more functionality than it adds. The current state of the art in e-paper renders the Kindle a black & white, video-less computer that you can’t read in bed without the light on. The Kindle is a bad design because it focuses on the medium (paper) rather than the message (video, sound, color). Electronic books, magazines and newspapers need not look like their paper equivalents, especially the drab black and white variety; they will have color and videos and will look much more like a web site than a dead tree. Apple know this and they will crush the Kindle.

The tablet will be positioned as the ultimate media reader, it will kill the Kindle near instantly by focusing on what the Kindle tried to, but wrongly – the screen. To differentiate reading the Vogue website on a laptop from reading Vogue on the tablet, Apple will arrange custom sites and deliver a device with a screen resolution and quality never seen before. Apple will deliver something with the interaction of a website and the seductiveness of a glossy magazine. It will offer syndicated, tablet-enhanced content and will be hailed as the savior of an entire industry.

But perhaps the notion of a tablet as an e-reader misses something much more interesting? Newspapers and magazines are not that interesting – despite the business model problems which create a lot of noise, magazines and newspapers already have a savior: common or garden websites. The fact that these websites have different economics that traditional media doesn’t like is tough luck. Whatever Apple tries to do, a tablet site will basically be a pretty website, and by following the iTunes Music Store or App Store model, unlike with music or software, Apple will be taking something that is already legally available on the web and corralling it into a controlled, walled-garden environment under the yet-to-be-proved auspices of value-add.

In terms of hardware, the tablet might offer something qualitatively superior but it won’t offer much that a laptop doesn’t already. In fact, without a stand, or a keyboard that can be used with two hands while holding it, it could be regarded as a willful, unergonomic gimmick, something based on the idea that a digital newspaper doesn’t look right with a keyboard. This is the UI of science fiction movies, not the real world. Nonetheless, such purity will play into the hands of Apple’s expertise and the tablet will no doubt be an extremely minimalist and elegant device to lust after.

But it could be the seductive purity and minimalism of the device that may cripple its true potential, if it doesn’t do what tablets traditionally offer beyond ordinary devices – allow you to draw with them. The problem is that drawing on a tablet would require stylus input (or at least using a regular pen) and the whole ethos of the iPhone generation Apple interface is geared around using a finger instead. Jobs famously stated ‘now what’ after dropping a stylus with an iPhone prototype and that led to the undoubted elegance of not needing to carry around a pen, when a finger will do. Unfortunately, fingers aren’t good for painting unless you are a three year old.

[Unfortunately Pen Computing has been Historically Uncool, But it Doesn’t Have to be That Way]

Why is pen-based drawing so important? Isn’t that a niche requirement for CAD-using architects or Photoshop- and Illustrator-wielding graphic designers? And anyway, few people have either the inclination or ability to draw, even if they have the tools to do so.

Touch-based computing isn’t just a feature, it’s a fundamental shift in the way we interact with a computer and it represents as big an interface development as the transition from the command line to mouse & icon. With a mouse, interaction is remote and clunky, but with touch, it is direct and allows precision and subtlety, through gestures. This is where the iPhone version of the Apple OS represents the way forward for all devices, and why it will run on the tablet. But if the tablet shows off the difference between it and a laptop through the precision of a wonderfully high resolution screen, surely the precision of input offered by allowing you to draw with it using a pen would open up unknown potential, taking drawing-based UI from niche to mainstream.

Sadly, drawing will be perceived as just that, niche. The inelegant Palm Pilot-like connotations of stylus input will make it very unlikely that considerable design effort will be applied to making sure an already beautiful and precious screen doesn’t break when you apply 50 times more pressure on it than a finger, by using a fine point.

A tablet is in many ways a laptop without its own keyboard or stand and there isn’t much that you can’t in theory do on a laptop that you can on a tablet – apart from draw. Drawing with a pen is the one thing a tablet is made for that can’t be done comfortably on the tilted screen of a laptop, and it would surely be the thing that opens up genuinely new avenues for undiscovered applications, rather than reading a newspaper.

[The original Apple tablet]

Sadly the future of a tablet for drawing with may rest with the long forgotten Newton. I hope I’m wrong.

In terms of geo-politics, the last decade was one of immense significance, but culturally it was an era that was so artistically bland, that it had no name till it was almost over. Until 2009 almost nobody referred to the noughties.

1. The event of the decade – Global Warming as Fact

Bookended by cataclysmic events, the noughties started with two of the world’s tallest skyscrapers in New York’s financial district being blown up in the name of God and ended with the castration of Mammon and the simultaneous failure of the US banking system, its largest mortgage companies, insurers and car manufacturers. The former was so visually extreme it would have seemed ridiculous as Hollywood fantasy, the latter so ideologically challenging that it would seem ridiculous as New York Times fiction. The Towering Inferno and Bonfire of the Vanities had been quenched by reality.

Both these things were signals of something of longer term significance: resource wars in the Middle East and a challenge to Western hegemony from the Far East, secular changes which will determine the course of the rest of our lives. For optimists, however, the problems caused by both are solvable via continued prosperity through growth and innovation.

But the event that really defines this decade, the parade-pissing, motherfucker of all events, was the realization that prosperity could actually be the problem not the solution. Despite antegalilean tabloid sentiment, the noughties were the decade when global warming was confirmed by scientists as fact, just as the earth orbits the sun. Global warming is a problem that could actually be exacerbated by growth and as such is the worst thing to happen to humans since fleas on medieval rats.

2. Art – For The Love of God, Damien Hirst

Nothing defines the decade in more compact form than this diabolically expensive piece of shit. It’s almost impossible to think of anything more disgusting than a diamond encrusted skull, it combines the graveyard exploitation of a human skin lampshade with the ostentatious vulgarity of a gold plated toilet. It didn’t sell, so unfortunately there isn’t a single Russian gangster or Connecticut hedge fund manager to crucify for purchasing it. Instead, it belongs to them all, the people who took tainted money and unimaginatively tried to launder it, by buying taste, via a largely obsolete but prestigious medium – gallery art. Who knows, perhaps Hirst was indeed joking, in which case this was genius rather than an ironic, decade defining, atrocity.

3. Movies – The Fog of War, Errol Morris

Since Being John Malkovich is technically from the previous decade, Adaptation would have been a worthy choice here. The complex, surreal fantasies of Charlie Kaufman and Spike Jonze or, to a lesser extent, Michel Gondry, created something truly original and groundbreaking, with enough clever-clever self-awareness to satisfy a conference on Post-modernism. But I’m choosing the opposite, a superficially ordinary and simple movie of an interview with a man in a suit. The Fog of War is an historically important confession from a dying man, Robert McNamara. It’s a film that looks simple but hides a subtle complexity which could only have been pulled off by someone of the caliber of Errol Morris, and for all the contrived cleverness, Kaufman and Jonze couldn’t dream up something so morally and intellectually challenging as this interview. In the tiebreaker between Adaptation and The Fog of War, fact beats fiction at its strangest.

4. Celebrity – Sex Tape, Paris Hilton

Is celebrity a cultural category? Yes, if celebrity is something in its own right, celebrity for its own sake. The decade with no name until 2009 had plenty of Frankenstein-like tabloid creations: from two-headed monster, Branjelina, to bald-headed train wreck, Britney. But above all, Paris Hilton epitomized someone who was famous for no other reason than fame itself, a talentless circle-jerk of celebrity, catalyzed by fucking on camera in front of millions then whored out to TV stations that can’t show a single piece of this piece-of-ass ass’s ass.

5. Food – The Cupcake.

Cupcakes are the hamburger of desserts – a portable, sandwich-sized item that can be eaten in the car or on the street without cutlery. There’s a big difference between burgers and cupcakes, however: a good burger is a great, delicious and manly thing, whereas cupcakes are children’s food. They are to Laduree macaroons what spam is to filet mignon, the most boring of cakes – sponge, whose ordinariness is concealed by its look rather than flavor, using toppings of different colored icing. Appropriately enough, the transition of cupcake from boutique to global was triggered by extended pajama party, Sex and the City’s visit to the Magnolia Bakery in New York’s West Village, and for most of the noughties a line of bleating humans has extended from its entrance to somewhere several hundred yards away. The length of this line could act as a barometer of the sugar coated, let-us-eat-cake, reality-denial of the noughties. As the ripples of the great recession seep through every crevice of society, turning bakery cake lines to soup kitchen lines and the mood from denial to anger, perhaps – hopefully, it will wither.

6. T.V. – The Wire, HBO

I haven’t had a TV for most of the decade (by accident rather than design, and not because I’m a snobby intellectual ponce – I love TV) so I’m going to be completely dishonest here and pick something where I’ve only watched a part. I’ll rely on the better judgment of friends such as Jason Kottke and the fact that almost everything that I’ve seen on TV in the last ten years that has been good has been on HBO. While the BBC rested on its laurels and became victim of the endless Simon Cowellesque vaudeville that renders TV less interesting and unpredictable than watching people play Guitar Hero when it’s not your turn, HBO demonstrated that the length and pace of an extended TV series allows for superior character development and depth of plot compared with a movie. Perhaps this was the point where TV overtook film to become the medium where the best talent operates.

7. Internet – Flickr.

Internet applications are rarely designed – marketing departments communicate directly with engineering, rather like developer-driven architecture, where the architect is employed by the contractor. It used to be that deliberately crippled UI was considered a virtue; this could apply to anything from the arguably elegant minimalism of Craigslist, to the complexity of Wikipedia, which self-regulates against uncommitted publishers, or the horrendous anti-design of Myspace, which was supposed to be less off-puttingly elitist. Facebook put that theory to rest with its modernist style and attention to detail, but Flickr was the first popular web application that was really well designed. This was largely to do with the founders, Stewart Butterfield and Caterina Fake, who defied the stereotype by being both geeky and urbane. Similar to Vimeo, the beauty of the application has influenced the content and Flickr has become a source of stunning photography. Flickr was the first mature Internet application.

8. Books – People don’t Read, Steve Jobs.

People should listen to Steve Jobs, he might be the one, the messiah, all his products start with ‘I’.

My choice for the book that defined the decade is no book, and Jobs’s infamous statement that people don’t read.

“It doesn’t matter how good or bad the product is, the fact is that people don’t read anymore,” he said. “Forty percent of the people in the U.S. read one book or less last year. The whole conception is flawed at the top because people don’t read anymore.”

Jobs was referring to the Kindle, which is by all measures a success. However, I suspect that Jobs is right. Jobs’s ‘iSlate’ will surely be a multi-media device, based on the fact that being a black & white, video-less gadget on which you can read a paperback but can’t properly browse a website makes the Kindle a loser in the long run. People read, they just don’t read books. iSlate will be bigger than the Bible.

9. Architecture – Nothing in Particular, Zaha Hadid.

The architecture of the last decade was epitomized by ‘funny shaped’ signature buildings by signature architects where brand took precedence over substance, like a signed picture without a drawing. Its origins were in the fragmented splintered shapes of deconstruction, but ended up in more fluid, organic, double-curved forms that were previously the exclusive domain of product and car design. The architects that defined this style predated the trend or the computer modeling that allowed it to become a built reality rather than something that only existed in drawings; in the picture above, showing Hadid’s weird collaboration with Lagerfeld for Chanel, the designers themselves look like they are computer generated. This style of architecture became popular because it fitted the niche created by a speculative bubble. A building with an unsubtle, unusual shape, but boring floor plan and crude detailing has maximum impact for minimum design effort and can be done quickly. Zaha Hadid was once great – as a paper architect, but this is the style that Dubai made possible, it defines the decade architecturally, and history may not be kind to it.

10. Music – Killing in the Name, Rage Against The Machine

For 2009, the coveted UK Christmas Number 1, which had been dominated by winners of the reality TV talent show, the ‘X-factor’ for four years running, was won by Rage Against The Machine after a grassroots campaign organized on Facebook. As the traditionally saccharine festive season rings out with ‘Fuck you, I won’t do what you tell me’ rather than an orange tanned, pre-pubescent with super-glued hair in a zoot suit and flared collar white shirt singing ‘grandma I love you, you’re swell’, to the tune of Nessun Dorma and a cash register ringing up, perhaps Santa is real after all. The next decade is going to suck, but it will have a better soundtrack.

Goodbye to cupcakes, and X-factor and Paris Hilton and Dubai tower blocks, and all that.

Chas A. Egan and Charles H. Lineweaver calculated how run-down the universe is as things disappear into the ultimate run-down state of black holes (where you can’t do any work with what gets sucked in). Unlike previous calculations, they included freakishly big ones (a billion times the mass of the sun) rather than just the average (10 million times the mass of the sun); the big ones account for most of its run-down-ness: entropy.

The new results for entropy in Boltzmann units are: early universe (10^88); now (10^104); maximum (10^122).

(BTW entropy units are joules per kelvin – can someone remind me are the units of temperature itself, energy, or is it unitless)
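For what it’s worth, here is a quick conversion sketch. It assumes (my reading, not something spelled out above) that ‘Boltzmann units’ means the entropy divided by Boltzmann’s constant k, which leaves a pure number. The kelvin is an SI base unit in its own right, and k is the factor that turns a temperature into an energy, so multiplying by k gets back to joules per kelvin. The function name is made up for illustration.

```python
# Boltzmann constant in joules per kelvin (fixed exactly by the SI definition).
K_B = 1.380649e-23


def boltzmann_units_to_joules_per_kelvin(entropy_in_k: float) -> float:
    """Convert an entropy given as a multiple of k into joules per kelvin."""
    return entropy_in_k * K_B


# The three figures quoted above, assumed to be multiples of k.
universe_entropy_in_k = {"early universe": 1e88, "now": 1e104, "maximum": 1e122}

for label, value in universe_entropy_in_k.items():
    converted = boltzmann_units_to_joules_per_kelvin(value)
    print(f"{label}: {value:.0e} k = {converted:.3e} J/K")
```

Either way the exponents barely move, since k only shifts things by about 23 orders of magnitude out of more than a hundred.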

Ron Cowen has a good summary of the paper at Science News, where he mentions the following:

“Entropy quantifies the number of different microscopic states that a physical system can have while looking the same on a large scale. For instance, an omelet has higher entropy than an egg because there are more ways for the molecules of an omelet to rearrange themselves and still remain an omelet than for an egg”.

Entropy is surely about macrostates vs microstates. If we had a name for a particular arrangement of molecules in an omelet, that named omelet would have low entropy. We don’t; there are many ways to smash an egg and still call it an omelet, so omelets in general are high entropy.
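A toy count makes the macrostate vs microstate point concrete. This is only a sketch under my own assumptions: the ‘omelet’ is 100 coins rather than molecules, and entropy is taken as the natural log of the number of microstates W (Boltzmann’s S = k ln W, in units of k). The names are invented for illustration.

```python
import math


def entropy_in_k(microstate_count: int) -> float:
    """Boltzmann entropy S/k = ln(W) for a macrostate with W microstates."""
    return math.log(microstate_count)


COINS = 100  # stand-in for the molecules of the egg

# A label that pins down one exact arrangement: only one microstate fits it.
named_arrangement = 1

# A loose label, "about half heads": very many arrangements fit it.
half_heads = math.comb(COINS, COINS // 2)

print("named arrangement:", entropy_in_k(named_arrangement))
print("any half-heads arrangement:", round(entropy_in_k(half_heads), 2))
```

The same pile of coins has zero entropy under the precise label and a large entropy under the loose one, which is the sense in which entropy depends on what we choose to call the same.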

Does this mean that entropy is meaningless? No, but it means that it is relative to a particular system. The amount of potential work that can be done by system A on B depends not on some absolute measure of the free energy of A, but how much of that free energy is useful to B.

There is a relationship between the energy exchange between systems and information exchange, so the above paragraph could describe a relationship between two systems where the meaning of A was not absolute, but relative to B. One person’s high entropy omelet is another person’s very special low entropy omelet with a name.

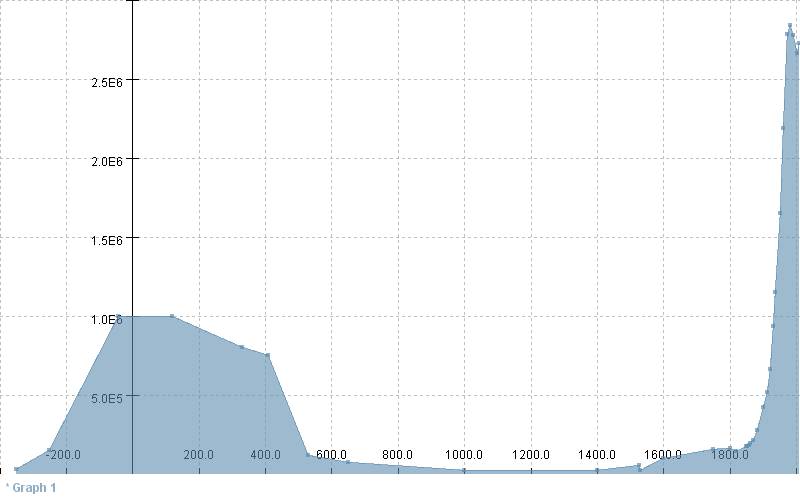

I plotted a graph of Rome’s population through history [source]. Some points: the rise and fall of Ancient Rome was roughly symmetrical (compared to the rapid decline of societies such as Greenland in Jared Diamond’s ‘Collapse’); the population during the Renaissance was minuscule (yet it was still a global center), and when Michelangelo was painting the Sistine Chapel it was considerably smaller than a town like Palo Alto is today (60K); Rome at its nadir was about the size of Google (20K employees); the growth of Rome during the Industrial era is much greater than the rise of Ancient Rome.

I’m not sure what the population of Rome’s hinterland would have been in ancient times, but assuming that present day Rome is more sprawling, the 4 million inhabitants of greater Rome would perhaps show the post industrial city’s growth as being even more extreme than its ancient counterpart.

Not entirely facetiously, note that the extended period of decline and relative stagnation between 400 and 1500 roughly corresponds to the span between the Council of Nicaea in the 4th century and the Copernican revolution of the mid 16th, events which stake out what could reasonably be called the Christian period (or partially, at least, the Muslim one, depending on your perspective).

Ireland has just created legislation which will make it illegal to blaspheme.

“Blasphemous matter” is defined as matter “that is grossly abusive or insulting in relation to matters held sacred by any religion”

Given that parts of the Koran directly accuse Christians of blasphemy, that all the Abrahamic religions are guilty of blasphemy to each other, and that almost all religions are blasphemous to someone, the blasphemy law is clearly farcical. The Bible and the Koran are now potentially illegal in Ireland, because of a law designed to protect their followers.

Dawkins claimed the law was a return to the Middle Ages, however in that period it would have made some sense since blasphemy would have only applied to the one religion, yielding less self-contradiction.

In short, Ireland’s blasphemy law is designed to protect only opinions which are held without reason against unreasonable opinion. Tenets held because of reason rather than faith are not protected, because they are not religion.

Ireland now has a law that says that:

(i) if enough people say black is white and are

(ii) offended by the opposite,

(iii) providing it’s a belief based on superstition not evidence,

(iv) it is illegal to tell them otherwise.

Memetics evangelist, Susan Blackmore has a piece in New Scientist which suggests that replicated information in computers is distinct from memes, and therefore something new altogether.

“Evolution’s third replicator: Genes, memes, and now what?”

Biologist Larry Moran calls this pseudoscience.

Forget about the fact that I was talking about something similar to Blackmore’s ‘third replicators’ in the post below (it was under the tag ‘half baked ideas’, a non-scientific ramble), one of the problems with the idea of memes is that it imagines that memes are somehow different from genes. This opens up the inevitable possibility of a zoo of gene-like things as Blackmore suggests and Moran takes issue with.

At an abstract level, genes contain information, so do memes and Blackmore’s ‘third replicators’. One of the defining features of information is that it can be stored in different languages or media.

A better way to look at memes might be that genes are a particular flavor of meme, instead of the current notion that memes are a by-product of intelligent gene-based organisms.

New Scientist has a piece on re-assessing economics after the crash.

I’ve a half-baked idea here that is, on the one hand, obvious, but that I haven’t seen spelled out anywhere: that free markets are prone to viruses.

Clearly some memes propagate despite the fact that they aren’t true: astrology; homeopathy etc., the memetic equivalent of viruses.

Much of free-market ideology is based upon the application of Darwinian ideas to economics.

Therefore, even if free-markets do evolve naturally under natural selection, not all market forces will be benign, there could be the free-market money equivalent of viruses. I’ll call these ‘benes’ (as in bean counter, slang for accountant).

These benes could merely be specific memes to do with money, such as a speculative or panic rumor, or indirectly, businesses based on memes, e.g. horoscopes. But they could equally be financial instruments, business practices or business environments themselves that are attractive and therefore become widely used, but are damaging to the free market that creates them. (An example of the latter might be lobbying, where businesses such as Verisign, for example, protect a monopoly over .com domain names more effectively by seducing legislators than by being competitive.) These are somewhat different from memes since they become much more like organisms, with a life of their own. In some cases, like the SWIFT transaction system or retail bank buildings, they arguably have a phenotype.

The idea that capitalism is prone to periodic viral infection through benes, seems like an obvious thing to investigate. It also creates a middle road between the doomsayers that claim that macro economics is dead and the libertarians who say that it would have been OK to let everything fail. The message: ‘Capitalism works overall, but be careful’. Just how careful, might be something that could be ascertained scientifically.

I never quite understood the beef between Dawkins and Gould over punctuated equilibrium. However, just because species flourish at different rates does not mean that DNA mutation does. (I need to double check to see if that was Dawkins’s point.)

To illustrate this consider a sand pile and the mini-avalanches that happen as sand is poured on the top at a constant rate. The rate of the pouring of sand may be constant but the avalanches will be varied – some big, some small, following a power law distribution.
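The sand pile can be tried out in a few lines. This is a minimal sketch, not a claim about any particular study: it assumes the standard toy version (the Bak-Tang-Wiesenfeld sandpile), where sand sits on a square grid, a cell holding four or more grains topples by passing one grain to each neighbor, and grains falling off the edge are lost. One grain is poured onto the middle cell per step, and the size of each avalanche is the number of topples it sets off. The grid size, grain count and function name are arbitrary choices of mine.

```python
def pour_sand(size: int = 21, grains: int = 4000):
    """Pour grains one at a time onto the middle of a grid.

    Returns (avalanche_sizes, heights): the number of topples caused by
    each grain, and the final grid of grain counts.
    """
    heights = [[0] * size for _ in range(size)]
    middle = size // 2
    avalanche_sizes = []
    for _ in range(grains):
        heights[middle][middle] += 1      # constant pouring rate: one grain per step
        topples = 0
        unstable = [(middle, middle)]
        while unstable:
            row, col = unstable.pop()
            if heights[row][col] < 4:     # already settled (or a stale entry)
                continue
            heights[row][col] -= 4        # topple: shed one grain per neighbor
            topples += 1
            if heights[row][col] >= 4:
                unstable.append((row, col))
            for d_row, d_col in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                n_row, n_col = row + d_row, col + d_col
                if 0 <= n_row < size and 0 <= n_col < size:
                    heights[n_row][n_col] += 1
                    if heights[n_row][n_col] >= 4:
                        unstable.append((n_row, n_col))
                # grains pushed past the edge simply fall off the table
        avalanche_sizes.append(topples)
    return avalanche_sizes, heights


sizes, _ = pour_sand()
quiet = sum(1 for s in sizes if s == 0)
print(f"{quiet} of {len(sizes)} grains caused no avalanche at all")
print(f"largest avalanche: {max(sizes)} topples")
```

The input never changes, one grain at a time, yet the output is a mix of nothing, small slides and the occasional big one. Checking whether the sizes really follow a power law would need a proper histogram and a much longer run than this.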

In evolution, a constant rate of change to genotype may create periods of rapid change and periods of little change in phenotype – punctuated equilibrium. The gradualist evolutionary mechanism of neo-Darwinism is not challenged by this.

(update – am checking the Gould vs Dawkins debate – the literature is not very succinct, surely I don’t need to read an entire book to see what the exact difference of opinion was?

It seems to be this: Dawkins figures that all complexity at the level of species, how they interact, appear and disappear within a changing environment, can be explained by natural selection operating at the level of genes. There doesn’t seem to be a simple explanation of what Gould thought (perhaps that’s why there are no 3 line explanations). My instinct is that Dawkins is right and analogies abound in terms of simple processes producing complex interactions – like the motion of three planets).